Note: Please follow the link(s) provided in each project for full details. Some project titles are also hyperlinks that lead directly to the project page.

| Visualizing Deep Learning Optimization Algorithms |

|---|

| Description: Gradient-based algorithms are the key to optimizing deep neural networks. Apart from vanilla gradient descent, there exist many variants of gradient-based algorithms that can improve the speed of convergence and can also avoid local minima. In this project, various deep learning optimization algorithms were studied and their speed of convergence was visualized using PyTorch's automatic differentiation tool and optimizers. The visualizations demonstrate how the iterative methods approach the minimum on convex surfaces, non-convex surfaces, and surfaces with a saddle point. |

| Source Code: https://github.com/mynkpl1998/Deep-Learning-Optimization-Algorithms |

| Some visualizations: |

|
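
The kind of comparison described in the optimization project can be sketched without PyTorch. The following is a minimal, dependency-free illustration and not the project's code: it runs vanilla gradient descent and gradient descent with classical momentum on the convex bowl f(x, y) = x² + 10y², using a hand-written gradient in place of automatic differentiation. The surface, learning rate, momentum coefficient, and step count are assumptions chosen for illustration.

```python
def f(x, y):
    """Illustrative convex surface (an assumption, not from the project)."""
    return x ** 2 + 10.0 * y ** 2


def grad(x, y):
    """Analytic gradient of f; PyTorch's autograd would compute this for us."""
    return 2.0 * x, 20.0 * y


def run_gd(start, lr=0.02, steps=50):
    """Vanilla gradient descent; returns the list of visited points."""
    x, y = start
    path = [(x, y)]
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
        path.append((x, y))
    return path


def run_momentum(start, lr=0.02, beta=0.9, steps=50):
    """Gradient descent with classical (heavy-ball) momentum."""
    x, y = start
    vx, vy = 0.0, 0.0
    path = [(x, y)]
    for _ in range(steps):
        gx, gy = grad(x, y)
        # The velocity accumulates an exponentially decaying sum of gradients.
        vx, vy = beta * vx + gx, beta * vy + gy
        x, y = x - lr * vx, y - lr * vy
        path.append((x, y))
    return path


gd_path = run_gd((4.0, 2.0))
momentum_path = run_momentum((4.0, 2.0))
```

Plotting `gd_path` and `momentum_path` over a contour plot of `f` would give a picture of how each method approaches the minimum at the origin.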

| Recurrent Deep-Q Learning |

|---|

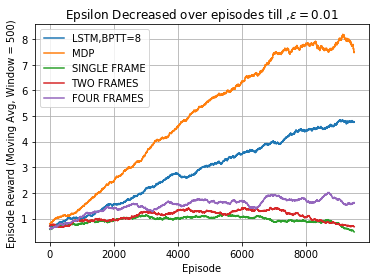

| Description: A Partially Observable Markov Decision Process (POMDP) is a generalization of the Markov Decision Process in which the agent cannot directly observe the underlying state; only an observation is available. Earlier methods maintain a belief (a pmf) over all possible states, which encodes the probability of being in each state. This quickly limits the size of the problems to which the method can be applied. The paper Playing Atari with Deep Reinforcement Learning instead uses the last 4 observations as input to the learning algorithm, which can be seen as a 4th-order Markov decision process. Many papers suggest that performance improves when more than the last 4 frames are used, but this is expensive in both computation and storage (experience replay). Recurrent networks can instead summarize what the agent has seen in past observations. In this project, I investigated this using a simple partially observable environment and found that a single recurrent layer achieves much better performance than using the last k frames. Source Code: https://github.com/mynkpl1998/Recurrent-Deep-Q-Learning Results: The figure compares the performance of the different cases. The MDP case is the best we can do, as the underlying state is fully visible to the agent. The challenge is to perform well given only an observation. The graph shows that the LSTM consistently performed better: its total reward per episode was much higher than when using the last k frames. |
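
The belief-state approach mentioned in the description can be made concrete with a small Bayes-filter sketch. This is an illustration of the general technique, not code from the project; the two-state model and its transition and observation tables are hypothetical.

```python
def belief_update(belief, action, observation, T, O):
    """One Bayes-filter step over a discrete POMDP.

    belief[s]        : current probability of being in state s
    T[action][s][s2] : P(s2 | s, action)
    O[action][s2][o] : P(o | s2, action)
    """
    n = len(belief)
    new_belief = []
    for s2 in range(n):
        # Predict: probability of landing in s2 before seeing the observation.
        predicted = sum(T[action][s][s2] * belief[s] for s in range(n))
        # Correct: weight by how likely the observation is in s2.
        new_belief.append(O[action][s2][observation] * predicted)
    total = sum(new_belief)
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return [b / total for b in new_belief]


# Hypothetical model: two states, one action, two observations.
T = [[[0.9, 0.1], [0.2, 0.8]]]
O = [[[0.8, 0.2], [0.3, 0.7]]]
belief = belief_update([0.5, 0.5], action=0, observation=0, T=T, O=O)
```

The belief vector has one entry per state, so both its size and the cost of each update grow with the state space. This is the scaling problem that motivates feeding recent observations, or a recurrent summary of them, to the learner instead.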

| A Deep Reinforcement Learning Framework |

|---|

| Description: This framework implements various state-of-the-art value-based deep reinforcement learning algorithms and supports OpenAI Gym environments out of the box. Implemented algorithms include Deep Q-learning, Deep Q-learning with target freezing, prioritized experience replay, and Double Q-learning. Source Code: https://github.com/mynkpl1998/Deep-RL-Framework |
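
To illustrate how two of the listed variants differ, here is a minimal sketch of the bootstrap targets used by Deep Q-learning with a frozen target network and by Double Q-learning. It is not taken from the framework: the function names are hypothetical, and action values are plain Python lists rather than network outputs.

```python
def dqn_target(reward, done, q_target_next, gamma=0.99):
    """Target with a frozen target network: it both picks and scores the action."""
    if done:
        return reward
    return reward + gamma * max(q_target_next)


def double_q_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double Q-learning target: the online network picks the action,
    the target network scores it."""
    if done:
        return reward
    best_action = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return reward + gamma * q_target_next[best_action]


# Hypothetical next-state action values from the two networks.
q_online = [0.2, 0.9, 0.1]
q_target = [0.5, 0.3, 0.8]
y_dqn = dqn_target(1.0, False, q_target, gamma=0.5)
y_double = double_q_target(1.0, False, q_online, q_target, gamma=0.5)
```

Decoupling action selection from action evaluation is what lets Double Q-learning reduce the overestimation that comes from taking a maximum over noisy value estimates.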

| Asynchronous Advantage Actor-Critic (A3C) - Policy Gradient Methods |

|---|

| Description: A high-quality implementation of the A3C algorithm with Generalized Advantage Estimation. It supports different neural-network-based policies out of the box. Performance of the algorithm scales linearly with the number of cores. Source Code: https://github.com/mynkpl1998/A3C/tree/dev Trained Policies:

|
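
Generalized Advantage Estimation, used in the A3C project, can be sketched in a few lines. This is a generic illustration under stated assumptions, not the repository's code: it handles a single rollout segment with no episode termination inside it, and the function name and default coefficients are hypothetical.

```python
def gae_advantages(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one rollout segment.

    rewards[t], values[t] : reward and critic estimate V(s_t) for t = 0..T-1
    last_value            : critic estimate V(s_T) used to bootstrap
                            (pass 0.0 if the segment ended in a terminal state)
    """
    advantages = [0.0] * len(rewards)
    next_value = last_value
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step TD error at time t.
        delta = rewards[t] + gamma * next_value - values[t]
        # Exponentially weighted sum of this and all later TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


adv_full = gae_advantages([1.0, 1.0], [0.5, 0.5], 0.0, gamma=1.0, lam=1.0)
adv_td = gae_advantages([1.0, 1.0], [0.5, 0.5], 0.0, gamma=1.0, lam=0.0)
```

With `lam=1.0` the estimate reduces to the Monte Carlo return minus the value baseline, and with `lam=0.0` it reduces to the one-step TD error; intermediate values trade variance against bias.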

| Deep Learning for the Physical Layer |

|---|

| Description: We present and discuss the application of Deep Learning (DL) to the physical layer. By interpreting a communication system as an autoencoder, we develop a fundamentally new way to think about communication system design as an end-to-end reconstruction task that jointly optimizes the transmitter and receiver components in a single process. Simulations were run to illustrate how the deep networks learn, and the results were compared with traditional methods. Report: https://drive.google.com/file/d/1q3Ba741gZxWAgv4t7ERwb5H93iajp2J-/view Modeling a communication system as an end-to-end learning system:

|
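
The end-to-end view in the physical-layer project can be sketched as a message-in, message-out pipeline: a transmitter maps a message to a power-normalized signal, a noisy channel corrupts it, and a receiver estimates the message. The sketch below is not the report's model. It uses a fixed, hand-picked codebook and a minimum-distance receiver as stand-ins for the learned encoder and decoder, and it assumes a real-valued AWGN channel with noise variance 1 / (2 · SNR). All names are hypothetical.

```python
import math
import random


def normalize(codeword):
    """Scale a codeword to unit average energy per channel use."""
    energy = sum(x * x for x in codeword) / len(codeword)
    return [x / math.sqrt(energy) for x in codeword]


def awgn(signal, snr_db, rng):
    """Add white Gaussian noise; assumes unit signal power per channel use."""
    sigma = math.sqrt(1.0 / (2.0 * 10 ** (snr_db / 10.0)))
    return [x + rng.gauss(0.0, sigma) for x in signal]


def decode(received, codebook):
    """Minimum-distance receiver: index of the closest codeword."""
    def dist(codeword):
        return sum((r - x) ** 2 for r, x in zip(received, codeword))
    return min(range(len(codebook)), key=lambda m: dist(codebook[m]))


def block_error_rate(codebook, snr_db, trials=2000, seed=0):
    """Fraction of messages decoded incorrectly over simulated transmissions."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        message = rng.randrange(len(codebook))
        received = awgn(codebook[message], snr_db, rng)
        errors += decode(received, codebook) != message
    return errors / trials


# Hypothetical codebook: 4 messages sent over 2 channel uses.
CODEBOOK = [normalize(c) for c in ([1, 1], [1, -1], [-1, 1], [-1, -1])]
```

In the autoencoder formulation, `CODEBOOK` and `decode` would be replaced by trainable networks, and the block error rate measured this way is one quantity on which a learned system can be compared with a traditional baseline.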

| Implementation of Convolutional and Recurrent Neural Network Inference for Heterogeneous Devices (GPU and FPGA) using OpenCL |

|---|

| Description: Many devices today have processors other than the CPU that can accelerate certain workloads, for example integrated graphics or DSPs in an embedded system. This creates an interesting opportunity for the deep learning community to use them to accelerate inference and training on edge devices. We attempted to implement inference for some well-known architectures, namely recurrent and convolutional neural networks trained on standard datasets, for FPGA and GPU in OpenCL (Open Computing Language). CNN Source Code: https://bitbucket.org/mynkpl1998/vgg_opencl/src/master/ RNN Source Code: https://bitbucket.org/mynkpl1998/rnn_opencl/src/master/ CNN Report: https://drive.google.com/file/d/1yDCyucwo6I5Z2bOd-tKOkEWo-ByrFHdc/view RNN Report: https://drive.google.com/file/d/1GzoLjMLM-rKMvbgFPtIJ7b3X_wivbxfw/view Results: The following screenshots show the running time on the GPU (left) and the resource usage on the FPGA (right).

|
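
As a reference for what a convolution inference kernel has to compute, here is a naive single-channel 2D convolution in plain Python. It is a sketch for illustration only, not the project's OpenCL code, and the function name is hypothetical.

```python
def conv2d_valid(image, kernel):
    """Naive single-channel 2D convolution (cross-correlation, no padding).

    Each output element depends only on the inputs, never on other outputs,
    which is why the two outer loops map naturally onto a grid of parallel
    work-items on a GPU or FPGA.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # In a parallel kernel, this body would run once per (i, j).
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            output[i][j] = acc
    return output


feature_map = conv2d_valid(
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[1, 0], [0, 1]],
)
```

A sequential reference like this is also a convenient way to check the numerical output of an accelerated implementation.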

| ATP-Predict |

|---|

| Description: We carried out a brief implementation of the paper Identification of ATP binding residues of a protein from its primary sequence and attempted to improve on the existing methods by applying different machine learning techniques to an extended dataset with optimized model parameters. We obtained a maximum cross-validation accuracy of around 0.64 on a balanced dataset, with a window size of 17. Project Web Page: https://mynkpl1998.github.io/atppredict/ |
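
The window size in the ATP-Predict description refers to the stretch of sequence examined around each residue. A minimal sketch of such windowing follows. It is an assumption about the general technique rather than the project's code: the function name, the padding symbol "X", and the example sequence are hypothetical.

```python
def residue_windows(sequence, window=17, pad="X"):
    """Return one fixed-length window centered on each residue.

    The sequence is padded on both sides so that residues near the ends
    still get a full-length window.
    """
    if window % 2 == 0:
        raise ValueError("window size must be odd to have a center residue")
    half = window // 2
    padded = pad * half + sequence + pad * half
    return [padded[i:i + window] for i in range(len(sequence))]


# Hypothetical short sequence; each residue yields one 17-character window.
windows = residue_windows("MKTAYIAKQR")
```

Each window can then be encoded as a feature vector and labeled according to whether its center residue binds ATP, which turns residue prediction into a standard classification problem.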

| Cancer Prediction using Deep Neural Networks |

|---|

| Description: This project investigates the use of deep convolutional networks for developing a cancer prediction model. We selected a high-quality image dataset containing both benign and malignant examples. We first built a classifier that used SIFT to extract features from the images. However, we found that this naive approach did not perform well on our dataset. We then explored deep learning techniques, specifically deep convolutional neural networks. Report: https://drive.google.com/file/d/1W24dv9um3QGnfZsEoDO-jFaOmISeWiDF/view Results:

|

| Visualizing VGG16 feature maps in Keras |

|---|

| Description: This project implements code for visualizing the feature maps of the VGG16 convolutional neural network using Keras. A particular layer of the network can be visualized by defining it as an output layer. Some examples are given below. Source Code: https://github.com/mynkpl1998/visualize-vgg16 Feature maps:

|